I have been a resident of Holmdel since 2017. Ever since we came here, I have seen our schools underperform. When I reached out to the BOE and its current members, I did not receive a constructive response about working together to address the issues I saw.

I am a little concerned that, so far, we have been playing a game of musical chairs. For example, Dr. McGarry, the previous superintendent, was the one driving the Holmdel 2020 initiative. We took his word for it and voted in favor of this investment. He left shortly after without giving the community an explanation or a status update on how the project implementation was going. Now some of the current board members avoid accountability by saying that this was before they were elected.

The way I see it, the school board has a fiduciary duty to the town’s residents. Even if this was before a board member’s time, I expect that member, on coming onboard, to have determined whether the project in question was on track and achieving its objectives. If it was, great! If it wasn’t, then the board needs to course correct and report back to the community on what was missing and how things will be brought back on track. Saying it was before your time should never be acceptable!

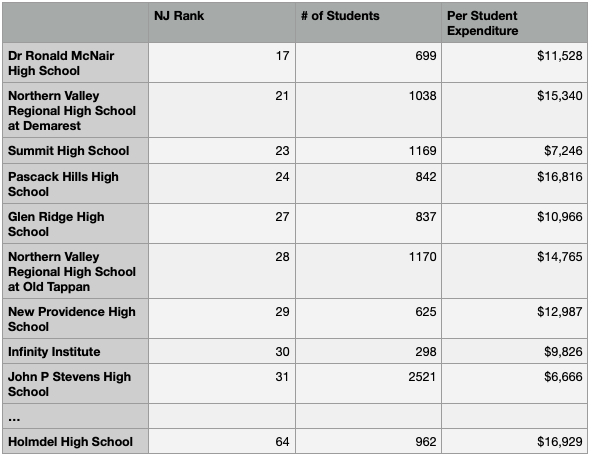

As you can tell from the following stats, our dollars do not go as far in improving the quality of education or rankings as they do in other school districts. I have not included the vocational school districts in this comparison, so that we are fairly comparing our school with similar suburban high schools. We seem to spend more money per student, but we are still ranked lower than many other public high schools.

Data from: https://www.schooldigger.com/go/NJ/schoolrank.aspx?level=3

I will not belabor the point by listing every school that is above us here, but I assume you get the point.

The Holmdel 2020 Initiative:

I understand that we had not invested in school infrastructure upgrades for a while, and it’s justified to invest in giving our kids better facilities. But we should also be interested in tracking the ROI on our $40M investment in this project. How much of these funds went into improving the metrics that drive our rankings? Having made this investment, the least the community should expect is for the board to share how these metrics have trended quarter over quarter, so that we can be confident that the quality of education is improving and that our rankings will follow. Anyone who has asked the town to invest in the schools makes the case that improved rankings will drive up home values and therefore result in better returns for taxpayers. We haven’t seen any such improvements in Holmdel yet!

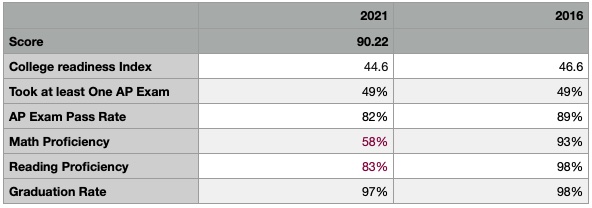

Holmdel Metrics from US News and World Report High School Evaluation Criteria:

These are the metrics that drive our US News rankings and here’s our current evaluation on each metric:

Source:

Current:

2016:

As you can see, there has been a precipitous drop in math proficiency since 2016. Reading proficiency has also fallen in this period. The obvious question to ask here is: have we lost a number of our good teachers? Each of us has anecdotally heard of teachers leaving or retiring. It would certainly make sense for the board to share these attrition statistics. Also, when filling these positions, do we strive to hire replacement teachers with the same level of seniority and expertise? Are our kids getting as good an education as before?

Another argument used to explain away similar performance drops is that we lose a lot of students to the county’s vocational school district. That does not explain this drop, since the vocational schools existed in 2016 as well and were pulling a roughly similar cohort from the high school.

We have had an interim superintendent for more than a year. I very much like Dr. Seitz, and I believe we are doing him a disservice by keeping this position temporary. I would like to know whether the board has confidence in him. If it does, please make his appointment permanent; if it does not, please begin a search for a permanent superintendent so that we can have accountability. Again, I am against playing musical chairs where no one takes responsibility.

One final item that is often mentioned by our current board members is that we were the platinum standard for school reopening. Before accepting that conclusion, I would like to see the studies (preferably double-blind) that the board reviewed before making investments into “UV-C lighting, bi-polar ionization filtration units, antimicrobial coatings on commonly used surfaces, retro-commissioned the District HVAC systems”. The absence of a cluster of cases does not show that these measures were responsible for it (correlation does not imply causation). As you know, a number of us chose to keep our kids home last year. Before the start of this school year, vaccines were available to our kids 12 years or older. How do we know that these factors did not do as much to prevent clusters of infections as our investments in infrastructure improvements? Attributing the outcome to a single factor is not supportable without appropriate studies.

I prefer using data to come to meaningful conclusions, because if you cannot measure it, you cannot improve it. And I haven’t seen meaningful metrics being tracked by the board or shared with us.

That said, I believe that it is time to bring change and new blood to the school board, so that it can be more receptive to our concerns about getting our schools to prepare our kids for a much more competitive future. My advice to the parents in Holmdel: please talk to each of the candidates before making up your mind on who can and will bring about this change. You should specifically ask each of them what changes they intend to bring about, what metrics they will track for these changes so that we as a community can determine whether we are on track, and how they will transparently share these metrics at each BOE meeting over their term.

We owe this to our children!