Trends in Information Dissemination: The speed of information transmission is increasing at a very rapid pace, while veracity (the source, the “truthiness” of the information (thank you, Stephen Colbert!), or whether it has been tampered with en route) has not kept pace.

Initially, information travelled only as fast as humans could travel: a courier would take a document or a piece of information and use a fast horse or chariot to convey it from one location to another. Humans then invented other modes of signal transmission: carrier pigeons, smoke signals, and sound or light signals within line of sight. Later we moved on to electric current (telegraph, telephone) and eventually to light, radio, microwave, VHF and UHF transmissions. We also learnt how to use satellites for communication when the curvature of the earth’s surface got in the way of line-of-sight transmission. Mostly this was point to point, with respected agents in the middle (newspapers, radio stations, TV stations etc.) establishing the veracity of the information.

Meanwhile, in the early part of this century, we had the advent of a number of social networks like Facebook, LinkedIn, Twitter, Snapchat, YouTube, MySpace etc. These networks connected individuals and made information transmission extremely agile. Think how easy it is to share an update or a personal opinion on these networks. This has developed to such an extent that it is possible to rapidly disseminate false news with real consequences.

Now it has become possible for anyone to act as an agent and share any content, however authentic or inauthentic, with their network. In a highly interconnected social network, veracity is what gets sacrificed.

Take, for example, one specific site, focusnews.us, which very often shows up on my Facebook feed.

This site usually runs incendiary stories with provocative headlines like the following (I have only attached news items that showed up on my Facebook feed today because someone in my network liked, shared or commented on them). Having a few catalysts in any social network means a news story can be disseminated very easily, without the veracity checks a traditional news organization would apply.

Here’s a commentary of someone’s personal opinion disguised as news and sensational clickbait:

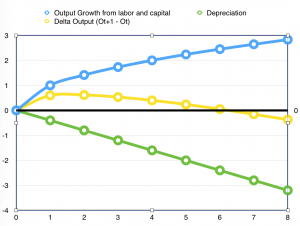

This is the effect of rapid information dissemination and network amplification: they help false news propagate and have a much more profound effect on public perception.

I looked at their website to understand whether they had any journalistic credentials. I found none: no names for the management team, the editor or any journalistic staff. The Contact Us section provided an anonymous email address (world@focusnews.us) that did nothing to reassure me of their credentials. So I am assuming this is a clickbait operation, with the goal of generating ad revenue by posting sensational stories on social networks and getting people to click on them. But I haven’t seen any ads on their site, so what should I assume? Is this a disinformation operation being fed to a gullible American electorate that loves its conspiracy theories?

When I looked up the internet registration record for this site, it turned out to be registered in Macedonia, yet it regularly publishes unverified US news.

RAW WHOIS DATA

Domain Name: FOCUSNEWS.US

Domain ID: D55884931-US

Sponsoring Registrar: GODADDY.COM, INC.

Sponsoring Registrar IANA ID: 146

Registrar URL (registration services): whois.godaddy.com

Domain Status: clientDeleteProhibited

Domain Status: clientRenewProhibited

Domain Status: clientTransferProhibited

Domain Status: clientUpdateProhibited

Variant: FOCUSNEWS.US

Registrant ID: CR255879236

Registrant Name: ivan stankovic

Registrant Address1: JNA 18

Registrant City: kumanovo

Registrant State/Province: macedonia

Registrant Postal Code: 1300

Registrant Country: MACEDONIA, THE FORMER YUGOSLAV REPUBLIC OF

Registrant Country Code: MK

Registrant Phone Number: xxxxxxxxxxxxxxxxxxxx

Registrant Email: @gmail.com

Registrant Application Purpose: P3

Registrant Nexus Category: C11

Administrative Contact ID: CR255879238

Administrative Contact Name: ivan stankovic

Administrative Contact Address1: JNA 18

Administrative Contact City: kumanovo

Administrative Contact State/Province: macedonia

Administrative Contact Postal Code: 1300

Administrative Contact Country: MACEDONIA, THE FORMER YUGOSLAV REPUBLIC OF

Administrative Contact Country Code: MK

Administrative Contact Phone Number: xxxxxxxxxxxxxxxxxx

Administrative Contact Email: @gmail.com

Administrative Application Purpose: P3

Administrative Nexus Category: C11

Billing Contact ID: CR255879239

Billing Contact Name: ivan stankovic

Billing Contact Address1: JNA 18

Billing Contact City: kumanovo

Billing Contact State/Province: macedonia

Billing Contact Postal Code: 1300

Billing Contact Country: MACEDONIA, THE FORMER YUGOSLAV REPUBLIC OF

Billing Contact Country Code: MK

Billing Contact Phone Number: xxxxxxxxxxxxxx

Billing Contact Email: @gmail.com

Billing Application Purpose: P3

Billing Nexus Category: C11

Technical Contact ID: CR255879237

Technical Contact Name: ivan stankovic

Technical Contact Address1: JNA 18

Technical Contact City: kumanovo

Technical Contact State/Province: macedonia

Technical Contact Postal Code: 1300

Technical Contact Country: MACEDONIA, THE FORMER YUGOSLAV REPUBLIC OF

Technical Contact Country Code: MK

Technical Contact Phone Number: xxxxxxxxxxxxxxxx

Technical Contact Email: @gmail.com

Technical Application Purpose: P3

Technical Nexus Category: C11

Name Server: NS1.FOCUSNEWS.US

Name Server: NS2.FOCUSNEWS.US

Created by Registrar: GODADDY.COM, INC.

Last Updated by Registrar: GODADDY.COM, INC.

Domain Registration Date: Fri Oct 21 22:24:17 GMT 2016

Domain Expiration Date: Fri Oct 20 23:59:59 GMT 2017

Domain Last Updated Date: Mon Oct 31 15:37:59 GMT 2016

DNSSEC: false
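For anyone who wants to run the same check on another site, here is a minimal sketch in Python that pulls a few fields out of a raw record like the one above. It assumes the plain `Key: Value` layout and the date style shown here; `parse_whois`, `parse_date` and `DATE_FORMAT` are names made up for this illustration and are not part of any WHOIS library. Other registries format their records differently, so treat it as a starting point only.

```python
from datetime import datetime

# A few lines copied from the record above; the rest is omitted for brevity.
SAMPLE = """\
Domain Name: FOCUSNEWS.US
Domain Status: clientDeleteProhibited
Domain Status: clientTransferProhibited
Registrant Country Code: MK
Domain Registration Date: Fri Oct 21 22:24:17 GMT 2016
Domain Expiration Date: Fri Oct 20 23:59:59 GMT 2017
Domain Last Updated Date: Mon Oct 31 15:37:59 GMT 2016
"""

# Assumption: matches the date style of this particular record only.
DATE_FORMAT = "%a %b %d %H:%M:%S GMT %Y"


def parse_whois(raw):
    """Collect 'Key: Value' lines into a dict of lists (keys can repeat)."""
    record = {}
    for line in raw.splitlines():
        # Split at the first colon only, so times like 22:24:17 stay intact.
        key, sep, value = line.partition(":")
        if sep and value.strip():
            record.setdefault(key.strip(), []).append(value.strip())
    return record


def parse_date(text):
    """Turn a date string from the record into a datetime object."""
    return datetime.strptime(text, DATE_FORMAT)


record = parse_whois(SAMPLE)
registered = parse_date(record["Domain Registration Date"][0])
updated = parse_date(record["Domain Last Updated Date"][0])

print("Country code:", record["Registrant Country Code"][0])
print("Days from registration to last update:", (updated - registered).days)
```

A recently registered domain with a registrant far from the audience it writes for does not prove anything by itself, but it is a cheap first signal to look at before trusting a "news" site.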

If people are gullible enough to fall for this clickbait and generate traffic for this website, there is nothing wrong with that: it lets the site push advertising based on its traffic, and a couple of kids make money off it. It is a perfect example of capitalism.

And who knows if they are also being paid by a foreign government to carry out a disinformation campaign – oh wait, that’s not true, right? We just heard from Putin that they would never do so… so obviously this line of reasoning is wrong…

And if my fellow Americans are gullible enough to fall for this clickbait and provide these entrepreneurs with a steady revenue stream, so be it. But let’s not call it news anymore…

Do also check out this report on how fake news works (from mediamatters.org) –

the internet, the GPS system, antibiotics and miracle drugs we have on the market. All of these are innovations that started as government projects and were then handed over to private enterprise.

happens daily across borders. This brave new world that we entered after the war is the product of a process called globalization. Globalization, according to Wikipedia, “is the action or procedure of international integration arising from the interchange of world views, products, ideas, and other aspects of culture.” Globalization has changed the world for the better, and it should become the standard for humanity. Its benefits include, but are not limited to, per capita and revenue growth, the spread of democracy through the developing world, and a general interconnectedness of the human species brought about by social, technological, and ideological exchanges across the world.

were once limited to operations in very few countries were able to create jobs where labor was cheapest, resulting in more employment and a rise in the standard of living in poor countries like China. China’s economy is a prime example of the economic benefits that globalization has produced. China has become a hub for manufacturing, with companies like Apple producing most of their products there. GDP per capita grew roughly 25-fold, from USD 317.885 in 1990 to USD 8,027.684 in 2015. Higher individual wages also correlated with a higher total GDP: GDP in China went from USD 360.859 billion in 1990 to USD 11.008 trillion in 2015. This huge increase in economic value is thanks to the countless companies that have taken advantage of China’s booming population and workforce. This in turn raised incomes for the majority of the Chinese populace. Standards of living have been going up, and outbound tourism from China has been steadily increasing, from 4 million people in 1999 to 50 million people in 2015. China is just one example of the profound effects of globalization on a population. As the winds of globalization inevitably sweep across the world, many developing countries will turn into booming, prosperous nations.

According to the Peterson Institute for International Economics, “A sophisticated model predicts that global free trade, removing all post-Uruguay Round barriers, would lift world income by $1.9 trillion ($375 billion for Japan, $512 billion for EU and EFTA, $537 billion for the US, $371 billion for developing countries, $62 billion for Canada, Australia, New Zealand).” This “global free trade”, brought about by trade deals like NAFTA and TPP, will enable labor to spread around the world and increase incomes across the board for citizens of the globe. However, globalization has come under fire from many people, including US President Donald Trump. His entire campaign was directed against globalization, claiming it took away jobs from “ordinary, hardworking Americans”. It is true that free trade drives companies to shuffle jobs around different countries, potentially leaving some people behind. The key to solving this issue is adaptability. Globalization hastens the pace of innovation across industries, thanks in no small part to the input of ideas from around the globe. New innovations can create new avenues for moneymaking, thus creating newer jobs in the places that lost them. To maximize global revenue, trade deals between nations should make it easier for companies to move across borders, all while keeping a balance of power between people, corporations, and governments. In fact, trade deals like these should include councils of representatives from corporations, governments, and unions, enabling openness and transparency in all facets of the economy.

If such a system is implemented on a global scale, the prosperity of the human race will soar.
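As a rough check on the China figures quoted earlier (GDP per capita of USD 317.885 in 1990 and USD 8,027.684 in 2015, and total GDP of USD 360.859 billion and USD 11.008 trillion), the sketch below converts each pair into a compound annual growth rate. It assumes steady year-on-year growth over the 25 years, which is a simplification, and the helper name `cagr` is my own.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


YEARS = 2015 - 1990  # 25 years between the two data points

# Figures as quoted in the text, in USD.
per_capita_growth = cagr(317.885, 8027.684, YEARS)
total_gdp_growth = cagr(360.859e9, 11.008e12, YEARS)

print(f"GDP per capita: {per_capita_growth:.1%} per year")
print(f"Total GDP:      {total_gdp_growth:.1%} per year")
```

Both rates come out well into double digits per year, which is what sustained "exponential" growth looks like when spread evenly over a quarter of a century.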

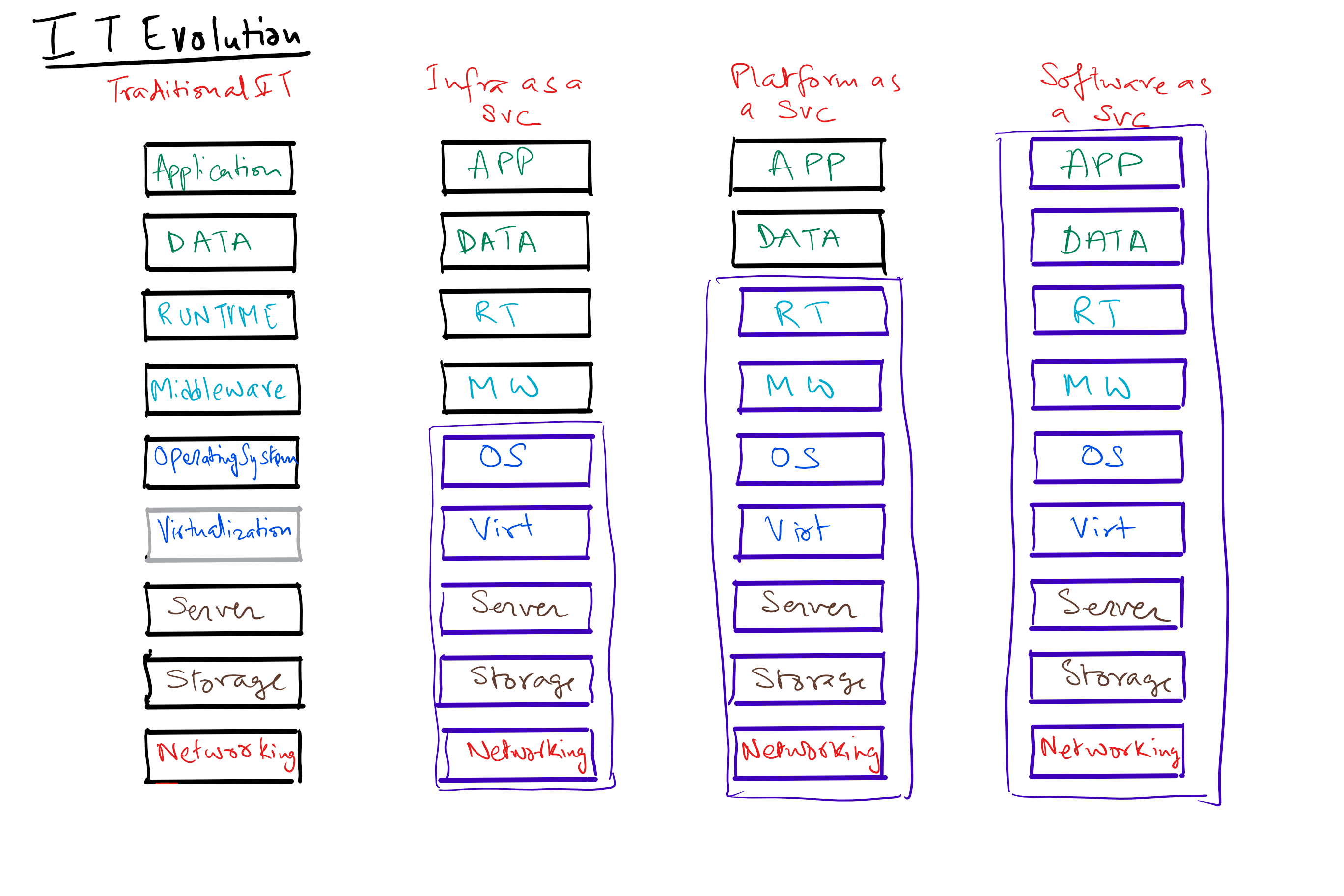

It is increasingly apparent that technology has become a large driver of business strategy. By providing new channels and mechanisms to interact with its customers, clients, partners and suppliers, technology is a key part of an organization’s sustainable competitive advantage. So it’s imperative for a product owner not just to understand the business landscape but also to understand how they can use technology components as key artifacts in differentiating their offering.

There have been a number of blogs which have focused on requirements that are intuitive, usable, simple and efficient. So I will not cover this aspect here.

users and their data, and this focus is not only to comply with regulations like HIPAA but also to stay off the front page of the Wall Street Journal. Given the

power. These were mainly invented as academic projects which later found application in data crunching within industry. Initially only the largest industries could afford to buy and run them. I am sure you remember the punch card drives and the huge cooling towers around the mainframes!

can be collected, processed and disseminated. Some advances came from the mainstreaming of IT into every business or organization. Initially the target was automation: simple enterprise systems that automated, for example, production, planning, accounting and sales. There were a number of different flavors of distributed platforms that appealed to different sets of users: the Windows platform, which was very popular in the business computing segment; the Mac platform, which appealed to individual users who needed creative art applications; and the Unix/Linux platforms, which appealed to the geeks. Eventually, as these platforms competed, the Linux/Java stack started to dominate back-end processing at most business enterprises, while the front end remained Windows based and Apple made a big dent in the personal computing segment.

forming a connected web, with standardized communication protocols like TCP/IP, HTTP, SMTP etc. This was a huge improvement over the unconnected islands that businesses and users had maintained before. It really improved the velocity of information travel: instead of copying data to floppy disks and carrying them from computer to computer, information could be transmitted directly from one computer to another once every node became addressable and able to understand communication over standard protocols.

processing slices on any physical machine. Computing became fungible and transferable. The idea was that if everyone was running their own physical servers which were not highly utilized, it would be better to have highly fungible compute and storage slices that could move around virtually to the least busy node, thereby improving the efficiency of our computing and storage hardware many times over.

this hardware can be embedded in any device or appliance. So a washing machine or a refrigerator may have as much computing power as a specialized computer from a few years back, or more, which implies that each of these devices is capable of producing process data that can be collected and analyzed to measure efficiency, proactively predict failures, or predict trends.

transfer value instead of just information. This came about from a seminal paper by Satoshi Nakamoto and the origins of blockchain (more on this in another post).

depend on a central store of value (or authority) to establish the truth. It tackles the double-spend problem in a unique and novel way without relying on this central agent. This has spawned applications in various spheres like digital currency, money transfer, smart contracts etc. that will definitely change the way we do business.
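To give a feel for what "double spend" means, here is a deliberately tiny sketch of a hash-linked ledger in Python. It is not how Bitcoin or any real blockchain is implemented: there is no mining, no network, no signatures and no consensus between nodes, which are exactly the parts that remove the need for a central agent. The class `ToyLedger` and its methods are hypothetical names for illustration. All it shows is two ideas: a coin can only be sent by its current owner, and each block stores the hash of the previous one so that tampering with history is detectable.

```python
import hashlib
import json


def block_hash(block):
    """Deterministic SHA-256 hash of a block's contents."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class ToyLedger:
    """Single-process toy ledger; illustrative only."""

    GENESIS = "0" * 64  # placeholder 'previous hash' for the first block

    def __init__(self, initial_owners):
        self.owners = dict(initial_owners)  # coin id -> current owner
        self.chain = []                     # list of transfer blocks

    def transfer(self, coin, sender, receiver):
        """Record a transfer; refuse it if the sender no longer owns the coin."""
        if self.owners.get(coin) != sender:
            return False  # double spend (or the sender never had the coin)
        prev = block_hash(self.chain[-1]) if self.chain else self.GENESIS
        self.chain.append(
            {"coin": coin, "from": sender, "to": receiver, "prev": prev}
        )
        self.owners[coin] = receiver
        return True

    def is_intact(self):
        """Check that every block still points at the hash of its predecessor."""
        expected = self.GENESIS
        for block in self.chain:
            if block["prev"] != expected:
                return False
            expected = block_hash(block)
        return True


ledger = ToyLedger({"coin-1": "alice"})
print(ledger.transfer("coin-1", "alice", "bob"))    # alice owns the coin
print(ledger.transfer("coin-1", "alice", "carol"))  # alice tries to spend it again
```

In this toy version a single object decides what is valid, so it is still a central agent; the novelty described above lies in getting many independent nodes to agree on the same chain.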

reduce algorithms and its family of peripheral components/applications like HDFS (Hadoop Distributed File System), Hive (interpreter that turns SQL into MR code), Pig (scripting language which gets turned into MR jobs), Impala (SQL queries for data in an HDFS cluster), Sqoop (moves data from a traditional relational DB into an HDFS cluster), Flume (ingests data generated by source or external systems onto the HDFS cluster), HBase (real-time DB built on top of HDFS), Hue (graphical front end to the cluster), Oozie (workflow tool), Mahout (machine learning library) etc.
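To make the map-reduce idea concrete, here is a toy, single-process word count in Python. It only mimics the map, shuffle and reduce steps in memory; it does not use Hadoop or any of the components listed above, and the function names are made up for illustration. On a real cluster the same three steps would be spread across many machines.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse the values for one key into a single count."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle and reduce over a list of documents."""
    pairs = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data needs big clusters", "data moves fast"])
print(counts)
```

Because each map call looks at one document and each reduce call looks at one key, the work can be split up and run in parallel, which is the property that makes the approach useful for very large data sets.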

make sense of this. There are a number of tools available that visualize and provide insights into this data, and they inherently use a best-fit model that fits the existing data and also provides predictive value when the causative variables are extrapolated.
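The simplest example of such a best-fit model is a straight line fitted by ordinary least squares. The sketch below is plain Python with made-up data points; the function names `fit_line` and `predict` are my own, and real tools use far richer models than this.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Extrapolate the fitted line to a new value of x."""
    return slope * x + intercept


# Made-up observations, purely for illustration.
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]

slope, intercept = fit_line(xs, ys)
print("slope:", slope, "intercept:", intercept)
print("prediction for x = 6:", predict(slope, intercept, 6))
```

The fit describes the existing points, and `predict` extends it beyond them. How far such an extrapolation can be trusted depends on whether the relationship really holds outside the observed range.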

solve the following categories of problems using various methods (statistics, computational intelligence, machine learning or traditional symbolic AI) to achieve goals like social intelligence, creativity and general intelligence.

e.g. autonomous cars, which use a variety of sensors like cameras and radars to collect information about the road and other vehicles and make real-time decisions about control of the car.

with humans for survival? Will this be a symbiotic relationship or a competition for survival? Are we simply a tool in the evolutionary process, playing our part in creating a smarter, better and more resilient new being?
