With the recent news of numerous data breaches, and of companies caught using questionable business and technology practices to manage customer data (practices that may seem to breach the public trust), the question that comes to mind is: how important is “Trust”? How much should you invest in proactively maintaining trust, and what is the cost of a “breach of trust”? How do you recover from a breach? Which foundational elements of trust does such a breach damage? And, borrowing a slogan from MasterCard's “Priceless” campaign, is it fair to say: “Not having your company’s data breach on the front page of the Wall Street Journal: Priceless”?

Let’s look at some basics…

What is Trust?

Here are a few definitions that I have found most relevant:

Paraphrasing social psychologist Morton Deutsch:

Trust involves some level of risk, and risk has consequences whose payoffs are either beneficial or harmful. These consequences depend on the actions of another person, and trust is the confidence you have that the other person will behave in a manner that is beneficial to you.

Patricia Jenkinson, Professor of Communications at Sacramento City College, defines the overlapping elements of trust as follows:

♦ Intent to do well by others

♦ Character – being sincere, honest and behaving with integrity

♦ Transparency – being open in communication with others and not operating with hidden agendas

♦ Competence / Capacity – ability to do things

♦ Consistency / Reliability – keeping your promises, meeting your obligations

Trust is important for us to feel physically and emotionally safe. With more trust, we can effectively and collaboratively work together towards common goals by sharing resources and ideas. When trust is high, we openly express thoughts, feelings, reactions, opinions, information and ideas. When trust is low, we are evasive, dishonest and inconsiderate.

There are two basic types of trust: interpersonal trust, which concerns one’s welfare, the handling of privileged information and relational commitment; and task-oriented trust, whose dimensions are the ability to do the task and the follow-through to finish it.

Evolution of Trust

In his book Sapiens, Yuval Noah Harari describes “cooperation in large numbers” as one of the key factors in the success of humans over other species (which were physically stronger and much more adept at surviving the extreme elements of the earth’s environment). Trust allowed us to cooperate in large numbers, which collectively gave us the ability to accomplish tasks beyond the capacity of a single individual. Chimpanzees also cooperate, but not in numbers as large as humans do, which limits what the group can accomplish.

Trust Platforms

With the advent of the digital age and large, virtually connected social networks, our paradigms of digital trust have changed substantially. In a series of TED talks, Rachel Botsman of Oxford University describes the transition from hyperconsumption to collaborative consumption, and the evolution of trust from local to institutional to distributed.

This evolving, distributed trust platform has three foundational layers, which Ms. Botsman describes as the Trust Stack. Together they allow us to trust relatively unknown people:

♦ Trust the Idea

♦ Trust the Platform

♦ Trust the other user

When the platform enforces accountability for a user’s actions (it can restrict that user’s future transactions for bad behavior), it lends implicit trust to transactions between complete strangers, such as those on the Uber or Airbnb platforms. One illustrative example is how differently people behave (say, in cleaning up their room) when staying at a hotel versus with an Airbnb host. In the former, the expectation is that the institution will not hold them accountable for bad behavior. In the latter, the platform enforces accountability through mutual feedback and social reputation for both the guest and the host. This enhances trust and encourages good behavior.

Per Ms. Botsman, this is just the beginning, because the real disruption under way isn’t technological: it is a fundamental change in the way we will transact in the future. Once a trust shift has happened around a behavior or an entire sector, it cannot be reversed. The implications are huge.

A Simple Experiment

Dan Ariely, a professor at Duke, describes a very nice social experiment in his TEDx talk in Jaffa. Suppose that in a model society everyone is given $10 at the beginning of the day. Any money put into the public goods pot is multiplied by 5 at the end of the day and divided equally among everyone. For example, if 10 people each put their $10 into the pot, the pot holds $100. Multiplied by 5, that becomes $500, and everyone gets $50 back at the end of the day. Everyone is happy.

Now suppose that the next day one person cheats. Everyone except that person puts in $10, so the pot holds $90. At the end of the day it has grown to $450, and everyone gets $45 back. The contributors notice that they did not get the full $50 back. Meanwhile, the person who betrayed the public trust ends up with $55: the $10 kept plus the $45 share.

Dan then asked what would happen the following day. The answer: no one would contribute to the public pot. His point is that most trust games play out like a prisoner’s dilemma. There is a very unstable equilibrium in which everyone contributes (cooperates) and a stable equilibrium in which no one does. To maximize the overall benefit, everyone has to cooperate, and a single defection lowers the benefit for everyone else. The moral of the story is that “Trust” is a public good and an incredible lubricant for society. When we trust, everyone is better off; when people betray the public trust, the system collapses and we are all worse off.
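The arithmetic of the experiment can be checked with a short model. This is a minimal Python sketch, assuming the rules as described: a $10 daily endowment, a multiplier of 5 and equal division of the pot. The function name `public_goods_round` is hypothetical and not from the talk.

```python
def public_goods_round(contributions, multiplier=5, endowment=10):
    """Return each player's money at the end of one day.

    contributions[i] is what player i puts into the public pot (0 to endowment).
    """
    pot = sum(contributions) * multiplier
    share = pot / len(contributions)  # the grown pot is divided equally
    # Each player keeps what they did not contribute, plus an equal share.
    return [endowment - c + share for c in contributions]

everyone = public_goods_round([10] * 10)           # all 10 people contribute
one_defector = public_goods_round([10] * 9 + [0])  # the last person keeps their $10
print(everyone[0])       # 50.0
print(one_defector[0])   # 45.0 for each contributor
print(one_defector[-1])  # 55.0 for the defector
```

In this model, contributing $10 returns only 10 × 5 / 10 = $5 to the contributor, so each person is individually better off keeping the money. That is why everyone contributing is unstable. No one contributing (each person simply keeps $10) is the stable outcome, even though it leaves everyone worse off than the $50 they would get by cooperating.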

In Conclusion

A number of companies, for example eBay, Airbnb and Uber, have used transparency and a persistent reputation as mechanisms to keep people from betraying the public trust. The cost of betrayal on these platforms is that, after a hit to their reputation, betrayers would no longer be able to transact on the platform.

Adding punishment and revenge to the mix also changes the game: a reputation for being vengeful deters the first player from defecting. The justice system and the police are common examples of using punishment to maintain trust in society.
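The effect of punishment can be illustrated with the same numbers from the experiment. This sketch assumes a hypothetical fine charged to a detected defector; the `fine` parameter and the function name `defection_gain` are illustrative assumptions, not part of Ariely's experiment. It computes how much one player gains by defecting while everyone else contributes.

```python
def defection_gain(n_players=10, endowment=10, multiplier=5, fine=0.0):
    """Money one player gains by keeping their endowment instead of
    contributing, assuming every other player contributes in full.

    `fine` is a hypothetical penalty charged to the defector.
    """
    others = (n_players - 1) * endowment  # contributions from everyone else
    cooperate = (others + endowment) * multiplier / n_players
    defect = endowment + others * multiplier / n_players - fine
    # Algebraically: endowment * (1 - multiplier / n_players) - fine
    return defect - cooperate

print(defection_gain())         # 5.0  (defecting pays without punishment)
print(defection_gain(fine=10))  # -5.0 (a large enough fine removes the incentive)
```

With 10 players and a multiplier of 5, defecting gains $10 × (1 − 5/10) = $5. In this model, any fine above $5 therefore makes defection a losing move.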

For companies that build trust platforms that allow even strangers to transact, a betrayal of trust by the platform itself is far more damaging than a transgression by a single user on the platform. After such a breach, there is a real possibility that users will move to the extremely stable equilibrium of not cooperating and abandon the platform (losing network scale is an existential threat). Moving them back to cooperating and using the platform is a Herculean task.

No longer can we rest on our laurels by simply calling ourselves trustworthy without redesigning our systems, processes and people to be transparent, inclusive and accountable.

So remember: protect the idea first, then the integrity of the platform, and then individual issues or breaches that may impact trust. Once trust is broken, it’s very hard to rebuild or repair.

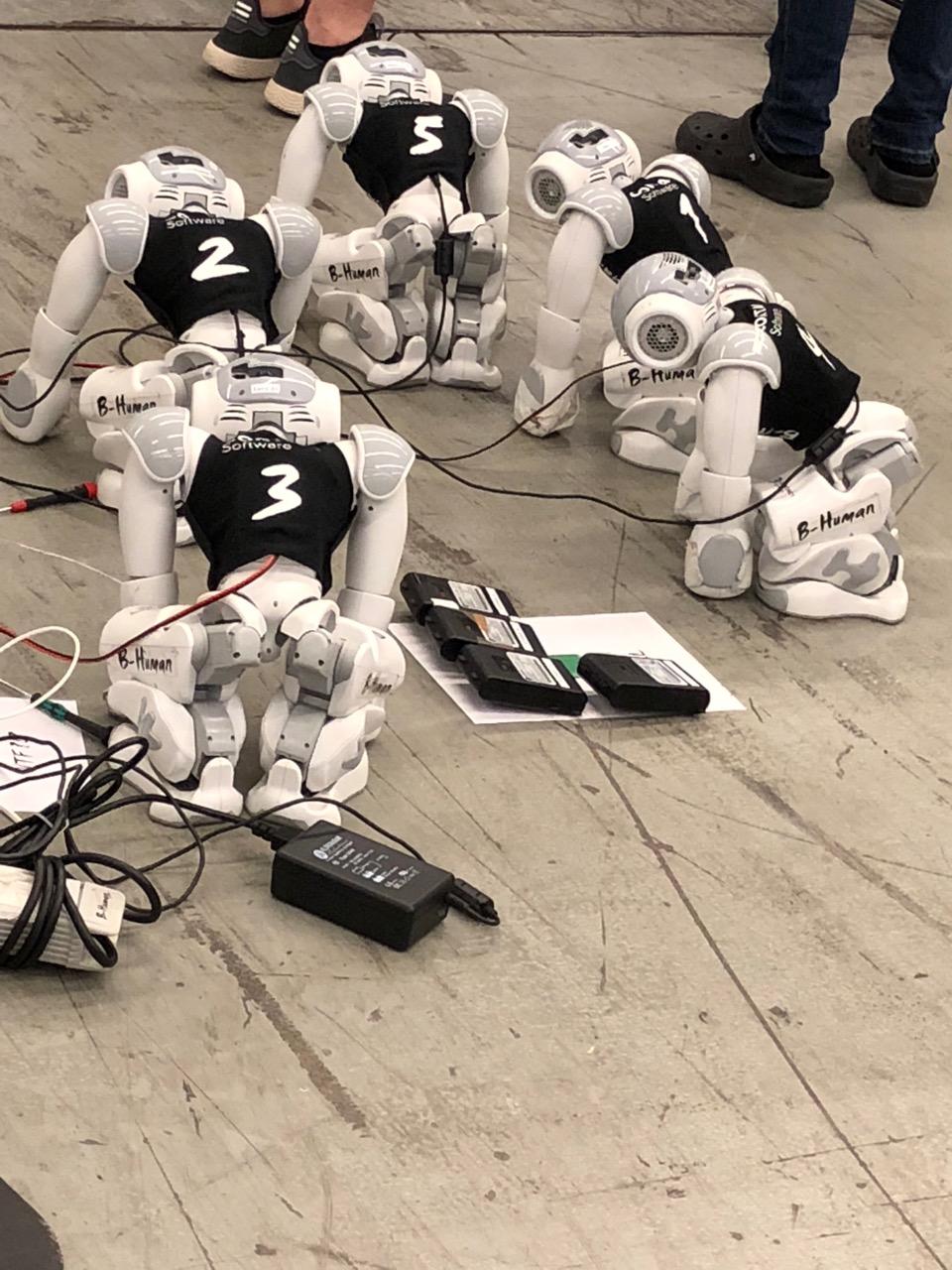

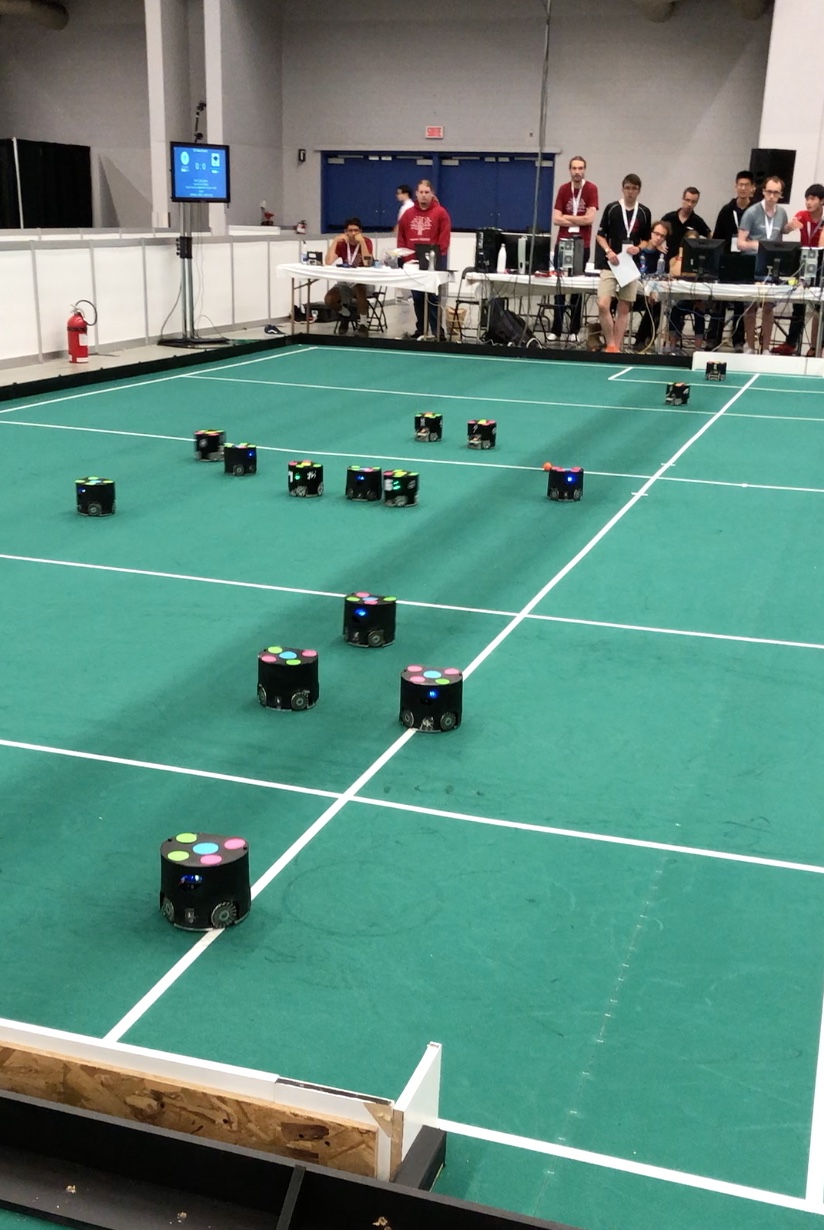

tickets early in the morning on Sunday, June 17th, and the rest of the day went into team setup, practice runs, and calibrating and tuning the robotics programs.


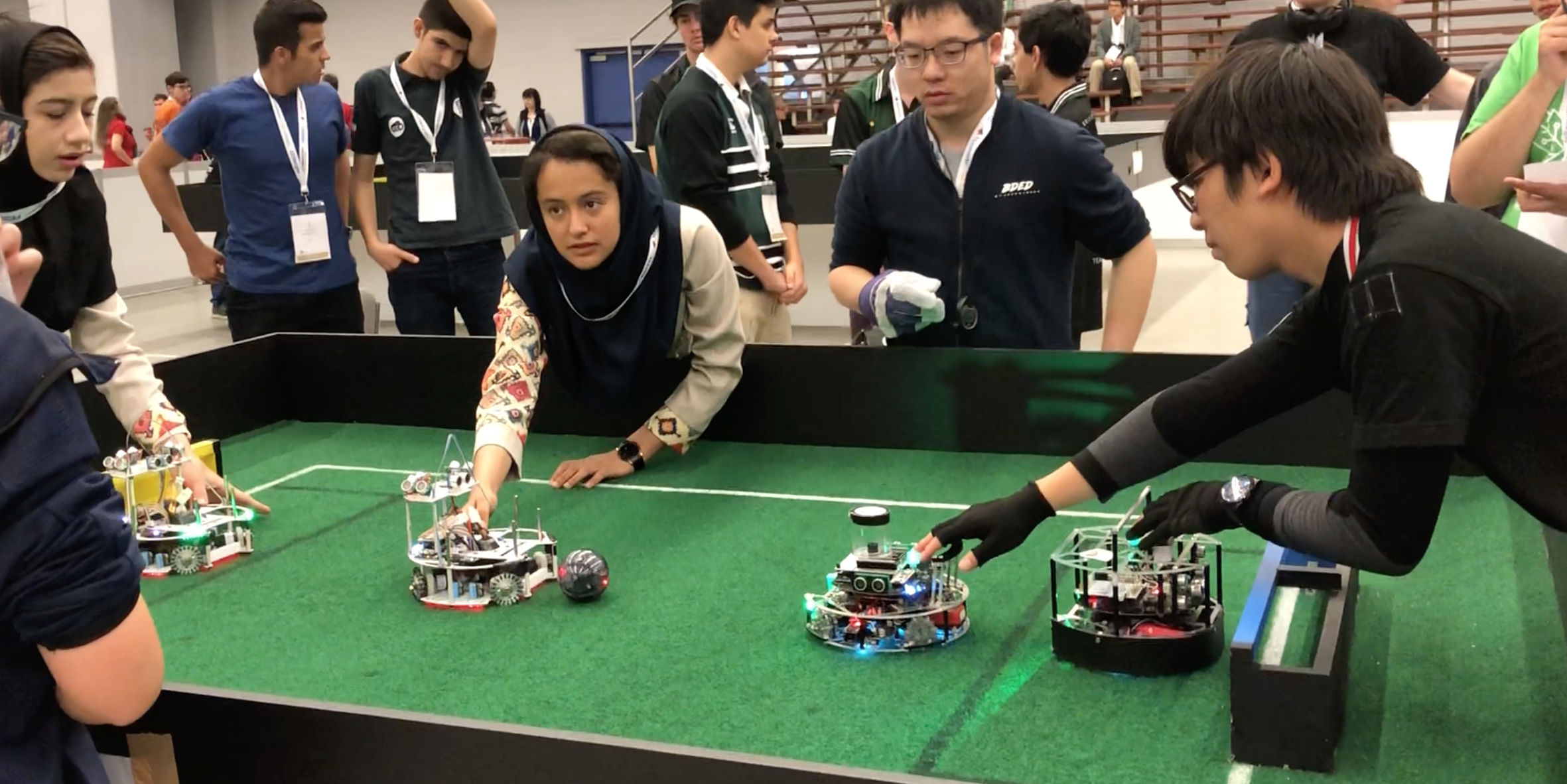

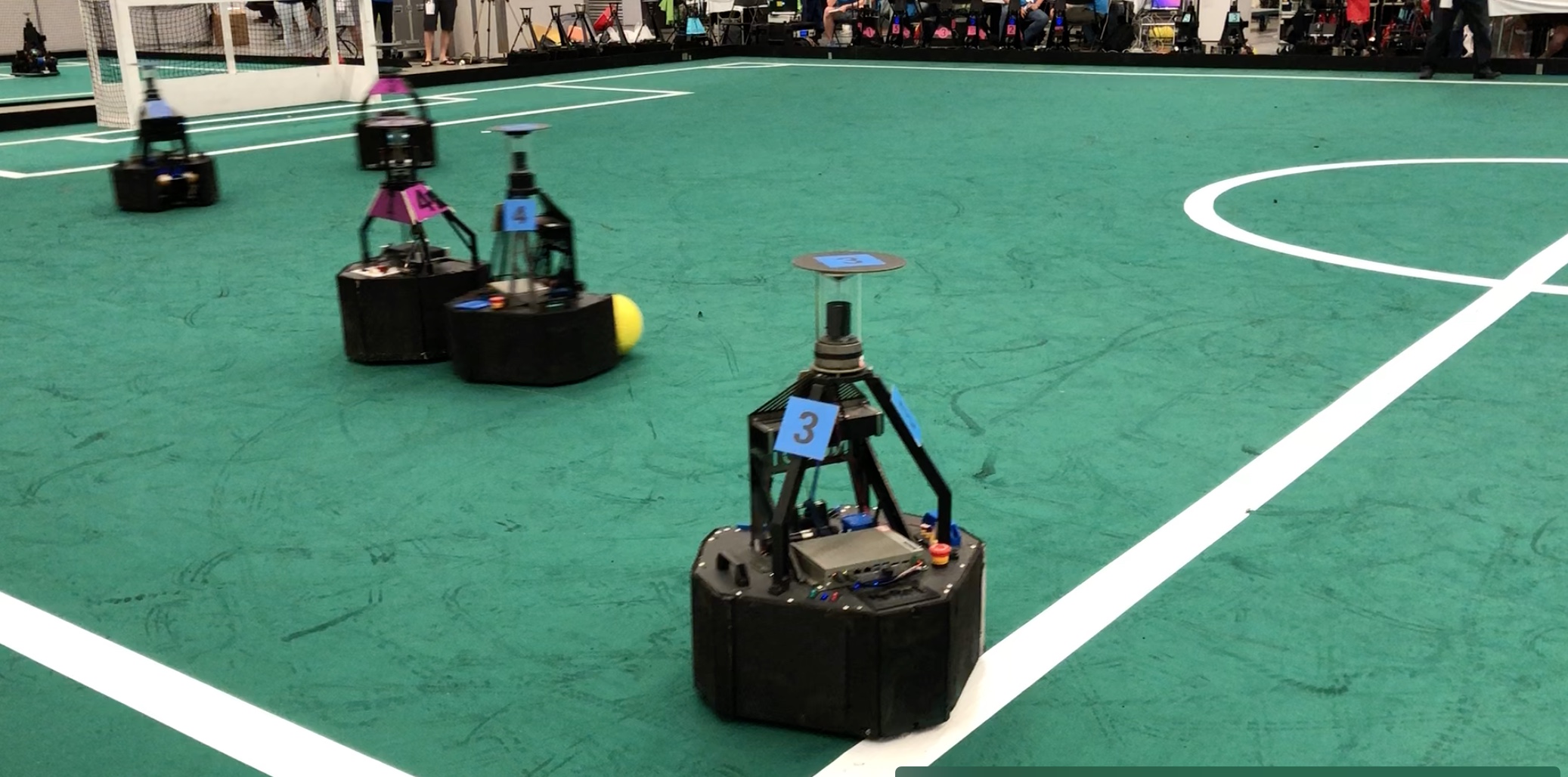


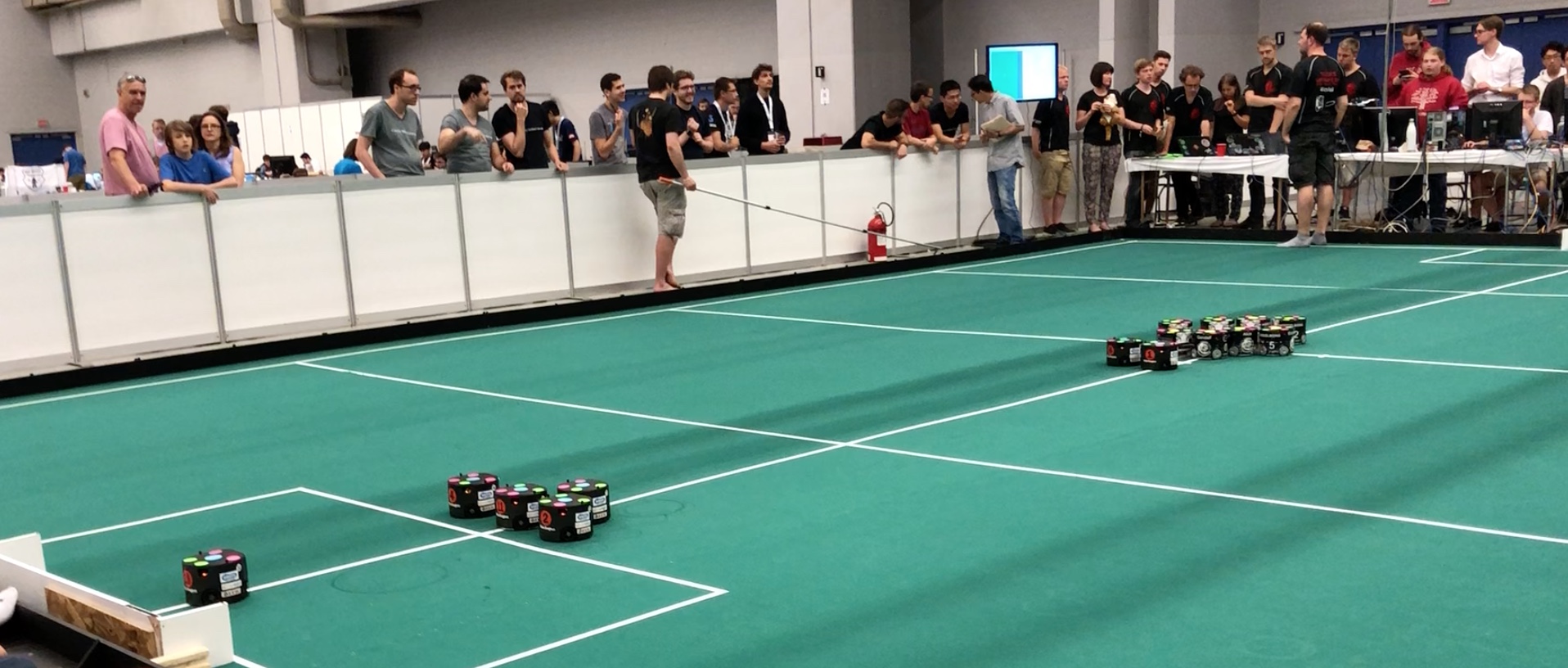

goal from one of the teams. A very fast-paced soccer game with bots acting in coordination as one team against an opposing team. Here’s a video that shows how exciting this can be.



There were a number of other home-setting challenges as well, for example unloading grocery bags and storing them in the right location or on the right shelf in a home.

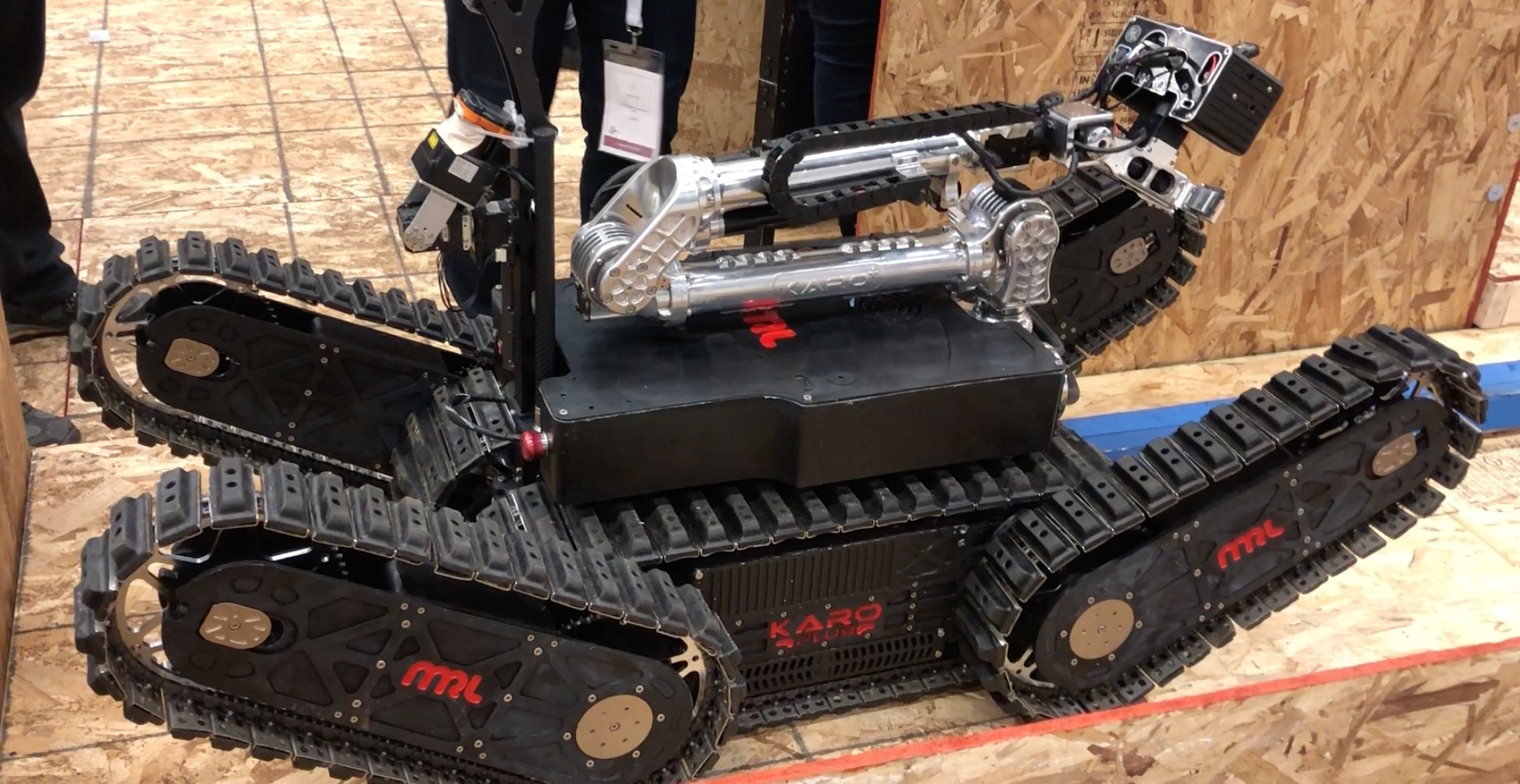


bots can be used in hazardous situations for rescue. Challenges included navigating difficult terrain, opening doors, accomplishing tasks such as taking sensor readings, and mapping a maze or a path through the field. Some of the videos listed here are very impressive. These teams were mainly from universities and research labs. Do check out the following links.

It has made huge investments in robotics. It will be evident from the following videos that these investments are critical to succeeding in the near future, when we expect much of the mundane work to be automated and mechanized.


a huge difference in terms of resources and motivation from state sponsorship, as was evident with the Iranian, Chinese, Russian, Singaporean, Croatian, Egyptian and Portuguese delegations.


learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.”

$$\theta = (X^T X)^{-1} X^T y$$

Refers to a set of simultaneous equations involving experimental unknowns and derived from a large number of observation equations using least squares adjustments.

brains (biological neural networks). Such systems learn the model coefficients by observing real-life data and, once tuned, can be used to predict outputs for unseen data, that is, observations outside the training set.
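For the least-squares case, the normal equation θ = (XᵀX)⁻¹Xᵀy gives the model coefficients in closed form. Those coefficients can then be used to predict outputs for unseen inputs. This is a minimal NumPy sketch, assuming a tiny made-up dataset generated from y = 1 + 2x. The data and the function name `normal_equation` are illustrative, not from the original. The sketch solves (XᵀX)θ = Xᵀy with `np.linalg.solve` rather than forming the inverse. That gives the same θ when XᵀX is invertible and is numerically more stable.

```python
import numpy as np

def normal_equation(X, y):
    """Least-squares coefficients theta = (X^T X)^{-1} X^T y.

    Solves (X^T X) theta = X^T y instead of inverting X^T X explicitly;
    the result is the same when X^T X is invertible, but more stable.
    """
    return np.linalg.solve(X.T @ X, X.T @ y)

# Hypothetical training data generated from y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 5.0, 7.0])
X = np.column_stack([np.ones_like(x), x])  # column of ones for the intercept

theta = normal_equation(X, y)              # approximately [1.0, 2.0]
prediction = np.array([1.0, 4.0]) @ theta  # unseen x = 4, approximately 9.0
print(theta, prediction)
```

The column of ones lets the first coefficient act as the intercept. For large or ill-conditioned problems, an iterative method such as gradient descent is often preferred over solving the normal equation directly.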