Our analytics platform uses only corporate data stores and serves only internal users and use cases. We chose to move it to the cloud. Our reasoning is as follows.

The usual perception among enterprises is that the cloud is risky, and that assets outside the enterprise perimeter are prone to attack. But given the mobility trends of our user population, both the desire to access every workload from a mobile device anywhere in the world and the growth of BYOD, it is becoming increasingly clear that perimeter-based protection may not be the best solution: too many exceptions have to be built into the firewalls to allow for these disparate use cases.

Here are some of the reasons why we chose otherwise:

- Cost: Running services in the cloud is less expensive. Shared resources are used more efficiently, and therefore cost less, when dissimilar loads can be matched against each other. A cloud provider serves many clients whose loads peak at different times, so they can share compute and memory, reducing the total hardware needed to cover peak utilization. During my PBM/Pharmacy days, I remember us sizing our hardware with a 100% buffer to handle peak load between Thanksgiving and Christmas. That was wasteful; what we needed was capacity on demand. We did some capacity on demand with the IBM z-series, but our workload wasn’t running and scaling on commodity hardware, so it was more expensive than a typical cloud offering.

- Flexibility: Easy transition to/from services instead of building and supporting legacy, monolithic apps.

- Mobility: Easier to support connected mobile devices and transition to zero trust security.

- Agility: New features/libraries are available faster than in-house, where libraries have to be reviewed, approved, compiled and then distributed before appdev can use them.

- Variety of compute loads: GPU/TPU available on demand. Last year, when we were running some deep learning models, introducing PCA brought our processing to a halt because of the large feature set we needed to form principal components from. We needed GPUs to speed up our number crunching, but we only had GPUs in lab/PoC environments, not in production. We ended up splitting our job into smaller chunks to complete training. Had we been on the cloud, this would have been a very different story.

- Security: This may sound like a paradox, but as I will explain later, a cloud deployment is more secure than deploying an app/service within the enterprise perimeter.

- Risk/Availability: Hybrid cloud workloads offer both risk mitigation and high availability/resilience.
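To make the cost argument above concrete, here is a minimal sketch of why pooling dissimilar loads needs less hardware than sizing each tenant for its own peak. The tenant names and hourly demand figures are made up purely for illustration; they are not measurements from our platform or from any provider.

```python
# Hypothetical demand (in servers) for three tenants across eight time slots.
# Each tenant peaks at a different time. All numbers are illustrative only.
demand = {
    "retail_pharmacy": [20, 20, 30, 80, 100, 60, 30, 20],
    "batch_analytics": [90, 100, 60, 20, 10, 10, 40, 80],
    "media_streaming": [30, 20, 20, 30, 40, 90, 100, 60],
}


def dedicated_capacity(demand):
    """Hardware needed when every tenant sizes for its own peak."""
    return sum(max(series) for series in demand.values())


def pooled_capacity(demand):
    """Hardware needed when tenants share one pool sized for the combined peak."""
    combined = [sum(slot) for slot in zip(*demand.values())]
    return max(combined)


if __name__ == "__main__":
    dedicated = dedicated_capacity(demand)
    pooled = pooled_capacity(demand)
    print(f"dedicated: {dedicated} servers, pooled: {pooled} servers")
    print(f"saving from pooling: {1 - pooled / dedicated:.0%}")
```

With these made-up numbers, dedicated sizing needs 300 servers while the shared pool needs 170. The saving comes entirely from the peaks not coinciding; tenants whose loads all peak together would see no benefit.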

Let’s consider the security aspect:

Maintaining and patching a perimeter-based enterprise network is expensive and risky. One wrong firewall rule or access exception can jeopardize the entire enterprise. Consider the following:

A typical APT (Advanced Persistent Threat) has the following attack phases:

- Reconnaissance & Weaponization

- Delivery

- Initial Intrusion

- Command and Control

- Lateral Movement

- Data Exfiltration

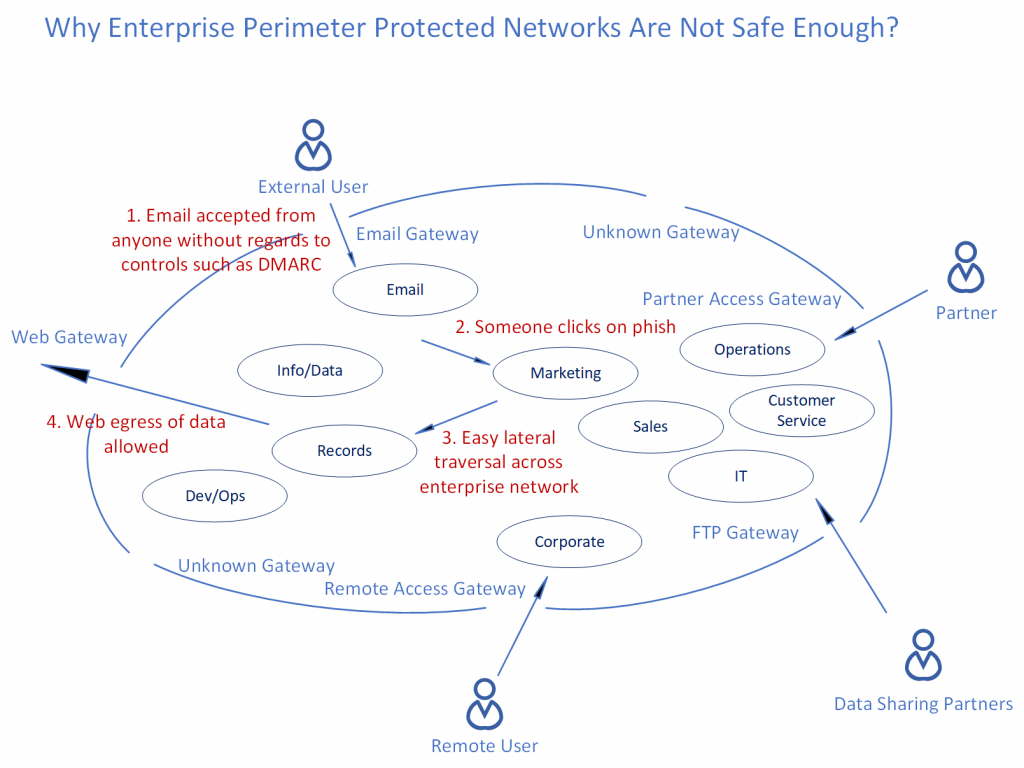

An enterprise usually accepts all incoming external email, and many organizations do not implement email authentication controls such as DMARC (Domain-based Message Authentication, Reporting & Conformance). There are also IT systems that receive incoming file transfers, so some kind of FTP gateway is provided. Third-party vendors and suppliers come in through a partner access gateway. Remote employees need to reach firm resources to do their jobs, which means a remote access gateway. Employees and consultants inside the enterprise perimeter need to access most websites and content outside it, which means a web gateway. Then there are the undocumented gateways opened up in the past, whose firewall rules people hesitate to remove because no one knows who is still using them. Given all these holes built into the enterprise perimeter, imagine how easy it is to allow web egress of confidential data through even a simple misconfiguration of any one of these gateways. The people managing the perimeter have to be right every time, while the threat actors need just one slip-up.

As you can tell from the above diagram, the following sequence could result in a perimeter breach:

- Email accepted from anyone without regard to controls such as DMARC.

- Someone internal to the perimeter clicks on the phish and downloads malware on their own machine.

- Easy lateral traversal across the enterprise LAN, since most nodes within the perimeter trust each other.

- Web egress of sensitive data allowed through a web gateway.
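The first step in the sequence above depends on whether inbound mail is checked against the sender domain's DMARC policy. A DMARC policy is published as a DNS TXT record at `_dmarc.<domain>` and is a semicolon-separated list of `tag=value` pairs. The sketch below only parses such a record and reports whether it asks receivers to act on failures. The function names and the `example.com` record are hypothetical, treating a missing `p` tag as `none` is a simplifying assumption, and a real mail gateway would also evaluate SPF/DKIM alignment, which is not shown here.

```python
def parse_dmarc(record: str) -> dict:
    """Split a DMARC TXT record into a {tag: value} dictionary."""
    tags = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing semicolon
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed tag: {part!r}")
        tags[key.strip().lower()] = value.strip()
    if tags.get("v") != "DMARC1":
        raise ValueError("not a DMARC record")
    return tags


def is_enforcing(record: str) -> bool:
    """True when the policy asks receivers to quarantine or reject failures."""
    # Assumption for this sketch: a missing p tag is treated as "none".
    policy = parse_dmarc(record).get("p", "none").lower()
    return policy in {"quarantine", "reject"}


if __name__ == "__main__":
    # Hypothetical records, not taken from any real domain.
    strict = "v=DMARC1; p=reject; rua=mailto:dmarc@example.com"
    monitor_only = "v=DMARC1; p=none"
    print(is_enforcing(strict), is_enforcing(monitor_only))
```

A gateway that ignores this policy, or a sender domain that publishes only `p=none`, leaves the delivery phase of the attack wide open.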

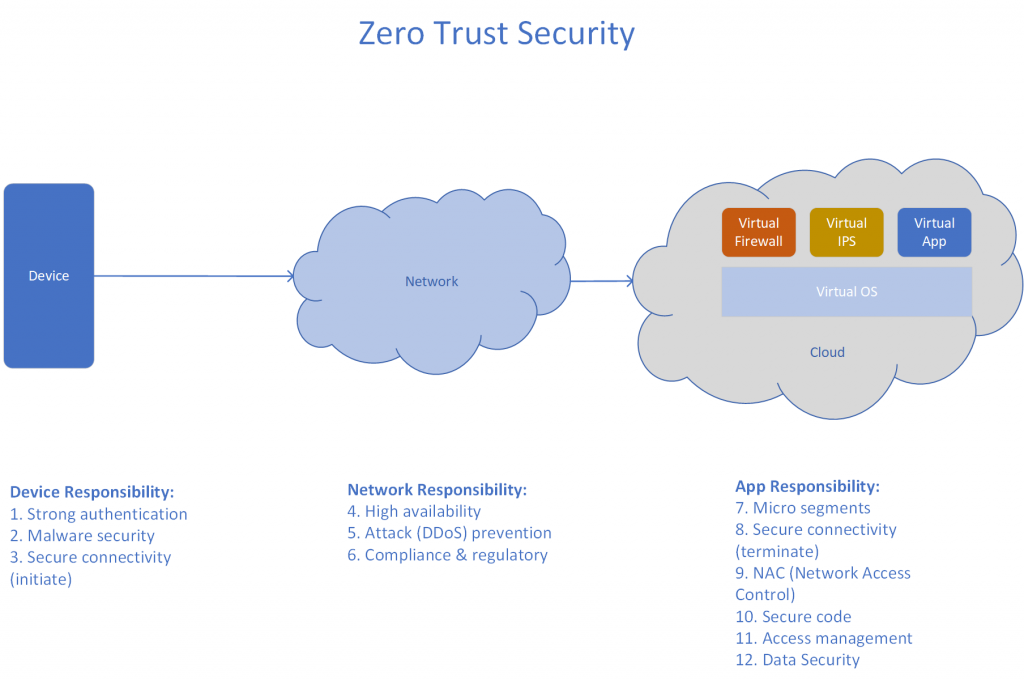

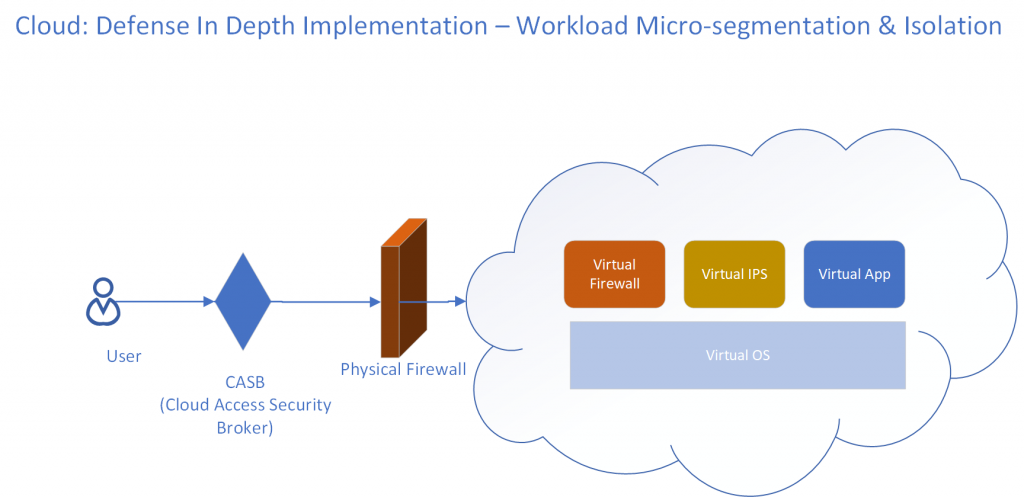

On the other hand, consider a micro-segmented cloud workload, which provides multi-layer protection through DiD (defense in depth). Since the workload is very specific, the rules on the physical firewall as well as on the virtual firewall or IPS (Intrusion Prevention System) are very simple and only need to account for the access allowed for this workload. Changes elsewhere in the enterprise do not require these rules to change. So distributing workloads through micro-segmentation and isolation actually reduces the risk of a misconfiguration or a data breach.
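As a rough illustration of how small such a rule set can be, here is a sketch of a default-deny allowlist for a single workload. The `Rule` class, the address ranges and the ports are all hypothetical; real cloud firewalls and security groups have their own configuration formats, and this is not any provider's API.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    source: str            # CIDR block allowed to connect
    port: int              # destination port on the workload
    protocol: str = "tcp"


# Hypothetical allowlist for one analytics workload. Anything not listed is denied.
ANALYTICS_RULES = [
    Rule("10.20.0.0/24", 443),     # internal API clients
    Rule("10.20.5.10/32", 5432),   # a single app server reaching the database
]


def is_allowed(rules, src_ip: str, port: int, protocol: str = "tcp") -> bool:
    """Default deny: permit traffic only if some rule matches it exactly."""
    addr = ip_address(src_ip)
    return any(
        addr in ip_network(rule.source)
        and port == rule.port
        and protocol == rule.protocol
        for rule in rules
    )


if __name__ == "__main__":
    print(is_allowed(ANALYTICS_RULES, "10.20.0.15", 443))   # matches first rule
    print(is_allowed(ANALYTICS_RULES, "10.20.1.15", 443))   # outside the /24
```

Because the list covers only this workload, a new gateway or exception elsewhere in the enterprise never touches it, which is the point of the isolation.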

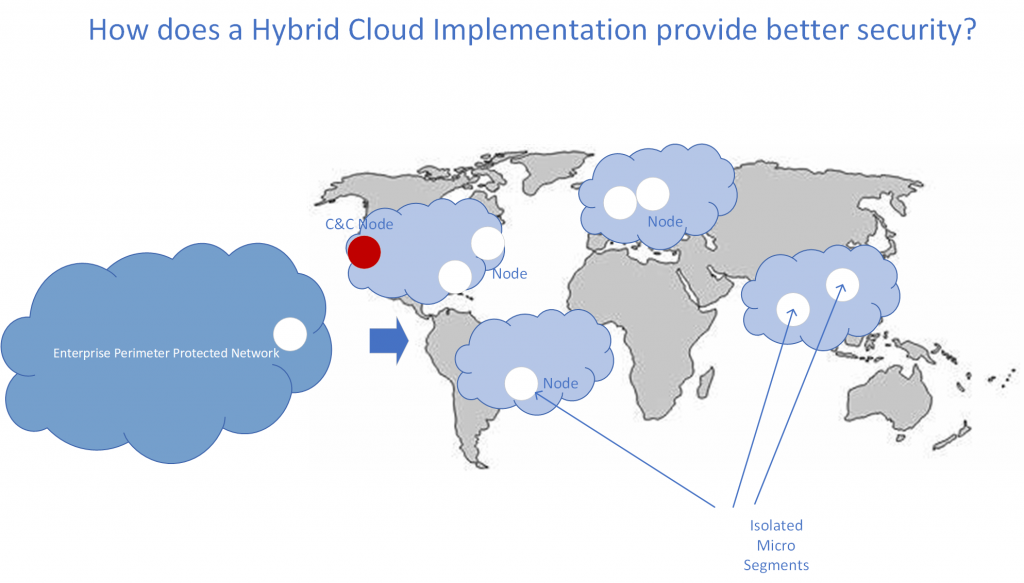

Another key aspect to consider is the distribution of nodes. Botnets have an interesting C&C (Command and Control) design: if the C&C node is knocked out, another node can assume the C&C function and the botnet continues its attack. Cloud workloads can implement the same structure, so that if one of the controlling nodes falls victim to a DoS (Denial of Service) attack, another can assume its role. Unlike an enterprise perimeter, a cloud deployment does not have a single attack surface with an associated single point of failure. A successful DoS attack does not neutralize all assets and capabilities; other nodes take over and continue to function, providing high availability.
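The takeover behaviour described above can be sketched as a toy model in which the controlling role always falls to the highest-priority node that is still up. The `Cluster` class and node names are hypothetical, and the priority-order rule is a simplification chosen for this example; production systems typically rely on health checks and a consensus protocol rather than a fixed list.

```python
class Cluster:
    """Toy model: the controlling role goes to the first node that is still up."""

    def __init__(self, nodes):
        self.nodes = list(nodes)   # priority order, highest first
        self.down = set()

    def mark_down(self, node):
        """Record that a node is unavailable, e.g. after a DoS attack."""
        self.down.add(node)

    def mark_up(self, node):
        """Record that a node has recovered."""
        self.down.discard(node)

    @property
    def controller(self):
        """Return the current controlling node, or None if every node is down."""
        for node in self.nodes:
            if node not in self.down:
                return node
        return None


if __name__ == "__main__":
    cluster = Cluster(["node-a", "node-b", "node-c"])
    print(cluster.controller)      # node-a holds the role initially
    cluster.mark_down("node-a")    # simulate a successful DoS on node-a
    print(cluster.controller)      # node-b takes over
```

The service is only unavailable when every node is down at once, which is a much higher bar for an attacker than taking out a single perimeter gateway.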

It’s time to embrace the micro-segmented, cloud-native architecture, where a tested and certified set of risk controls is applied to every cloud workload. The uniformity of such controls also allows risks to be managed much more easily than with the perimeter protection schemes that most enterprises put around themselves.

Another important trend that enterprises should look to implement is zero trust security. The following diagram represents the responsibilities for each layer/component when implementing zero trust security. A very good write-up from Ed Amoroso on how zero trust security works is here.